-

I’m terrified of getting a job 🙁 Please help

I’m 19 years old and I’ve never had a job before. I need one so badly and there are places in my town that I know hire on the spot but even then I feel like i really really cant do it. Even if i get past the application and interview portion I still feel so lost and terrified of what comes next. I won’t know my boss, i’ll have to talk with coworkers i dont know, ill have to talk with customers, troubleshoot customer problems on my own, and have the responsibility of running things on my own. I’m so stressed out and I can’t talk about these issues or ask for help with anyone i know irl. Please give me some advice, tips, guidance, or a list of what to do !!! -

They Come To Snuff The Hamster

Rescuers got a guinea pig from under the rubble of a destroyed house in #Mykolaiv.

You won't see anything nicer today. pic.twitter.com/s6QzerSnkX

— NEXTA (@nexta_tv) November 11, 2022

=1 Comment -

Casino

Recommend a good casino guide. -

Let’s talk Mental Health…and Glastonbury.

I’m sure anyone that has been knows that magical force field that seems to contain Glastonbury and allows you to largely forget the outside world. I struggle to imagine feeling unhappy whilst on the farm.

But, I am curious whether people have at a time when they’ve been struggling with their mental health and what impact being there has had? Maybe it has had a longer term positive impact? Or maybe a week of indulgence was, in retrospect, not such a good idea? Whilst you were there you felt totally free and that in itself was wonderful?

Without going into great detail, have been struggling of late and, although I am feeling quite a bit better than I was weeks ago, I am still finding days a bit of a battle. Was hoping on the final leg toward the festival to be feeling much better. Anyway, my hope is to be there and forget all my problems, have fun galore, and not being waking up feeling like crap! (Except maybe in a hangover type way).

Always like to encourage talk of MH (good to talk, blah blah, etc.), especially as one day I hope to make it my profession (training as a counsellor).

1 Comment-

It’s wonderful to see you addressing the important topic of mental health, Aggy45. Glastonbury does have a magical way of enveloping you in its unique atmosphere, offering an escape from the outside world.

Many people have found solace and temporary respite from their mental health struggles while at festivals like Glastonbury. The sense of freedom, joy, and the community spirit can be incredibly uplifting.

Remember, it’s okay to have moments of struggle, and seeking help or talking about mental health is a courageous step. As you pursue a career in counseling, your own experiences will undoubtedly add depth and empathy to your ability to help others.

If you ever need resources or support for your own mental health journey, you can explore this https://www.mentalhealth.com/therapy/family-therapy for valuable information.

-

-

Dual socket EPYC 9654 + Windows Workstation benchmarks on COMSOL, etc

I recently built a dual-socket workstation based on 96-core EPYC 9654 for my research. This post is to share some benchmark results from this system with those interested in the performance of many-core CPUs, like Threadripper 7995wx, running on Windows.Most of the benchmarks have been done on COMSOL, and for easy comparison with other systems, I used the benchmark files shared by twin_savage. For the files, you can check out the following post:

https://forum.level1techs.com/t/workstation-for-monte-carlo-simulations/203113/10

6. Thank you twin_savage for allowing me to use the files for the benchmarks and to share the results in this post.Here are the specs of the system

Dual-socket EPYC 9654 system

CPU: AMD EPYC 9654 (96 cores) 2S – HT off, all core turbo: 3.55 GHz

M/B: Gigabyte MZ73-LM0 rev 2.0

Memory: Samsung M321R8GA0BB0-CQKZJ (DDR5 64GB PC5-38400 ECC-REG) 24EA (2 x 12ch, 1.5TB in total)

GPU: Nvidia Geforce G710 🙂

PSU: Micronics ASTRO II GD 1650W 80Plus Gold

OS: Windows 10 Pro for Workstations (fTPM is not included in the MB so Windows 11 is not available yet)

SSD: Samsung 990pro 2TB + 860evo 4TB 2EAThe following are quick specs of three systems used for comparisons

AMD R9 5950x system

CPU: AMD Ryzen R9 5950x (16 cores) – HT on, all core turbo: ~4.3 GHz

Memory: DDR4 32GB PC4-25600 4EA (128 GB)

OS: Windows 10 Pro for WorkstationsIntel i9 7920x system

CPU: Intel Core-i9 7920x (12 cores) – HT on, all core turbo: 3.7 GHz

Memory: DDR4 32GB PC4-25600 8EA (256 GB)

OS: Windows 11 Pro for WorkstationsAMD Threadripper 5995wx system

CPU: AMD Ryzen Threadripper 5995wx (64 cores) – HT off, all core turbo: ~3.8 GHz

Memory: DDR4 128GB PC4-25600 ECC-REG 8EA (1 TB)

OS: Windows 11 Pro for Workstations++++++++++++++++++++++++++++++++++++++++

0. NUMA in Windows 10 Pro for WorkstationsBy default, Windows splits 192 cores into 4 NUMA nodes: 64 + 32 + 64 +32. The MB provides a specific NUMA per Socket (NPS) option in bios to control, but the 4 NUMA splitting overrides the NPS=1 in bios. Presumably, this is due to the 64-core threshold of the Windows processor group. NPS=1 works when the CPU is 64 cores or under per socket.

In the default, 3 processor groups form: 64 + 64 + (32+32) like the following figure.

NUMA node and prcessor groups

NUMA node and prcessor groups1179×533 79.8 KBIf NPS=2 is set, then the 192 cores become split into 48+48+48+48. The number of cores per node becomes symmetric, but the N of processor groups increases to 4. More importantly, the directly accessible memory channel becomes asymmetric, which can impact significantly on the overall performance. I don’t think NPS=2 is useful for 96c CPU.

NPS=4 splits the 192 cores into 8 x 24-core nodes. Symmetric N of cores, asymmetric memory channels in a node, and 4 processor groups as 4 x (24+24).

As pointed out by wendell in the following post,

https://forum.level1techs.com/t/windows-doing-dumb-stuff-on-big-systems/204408

1, NPS=3 would be the best option for 96c/192c system because it brings symmetric configurations of cores and memory channels with reduced 3 processor groups. Unfortunately, this option is not available in this system.1. COMSOL: default run (NPS=auto)

Just ran the benchmark with no additional options. Here we go:

9654×2

9654×21130×901 131 KB10 hours for the 50GB benchmark!! Comparisons with other systems are here.

default_run

default_run1088×288 64.8 KBThe reference Xeon W5-3435x result is by twin_savage as in the post above.

The 200GB bench is a memory-bandwidth-thirsty one, while the 50GB bench stresses CPU more than the memory bandwidth.

The default run uses all available physical CPU cores: all 192 cores are used during the benchmark. But for the 50GB benchmark, EPYC 9654 2S is the slowest system :smile:.

2. COMSOL: CCD control

Maybe the bizarre results are related to the Windows scheduler, which is not that smart, and/or the interconnection between the CCDs in AMD CPUs that yields high latency for the CCD communication. Because both can be relieved by reducing the number of cores, I disabled several CCDs via bios and ran the benchmark again. The results are

ccd_control

ccd_control1728×245 68.8 KBYeh! It works. Turning on only 2 CCDs out of 12 CCDs gave the best result for the 50GB benchmark, even though the directly accessible memory channel was reduced to 6 per socket. The impact is more pronounced in the 50GB benchmark, and the 200GB benchmark also got a mild speedup. But overall, the results are not impressive especially when compared with the reference Xeon results above.

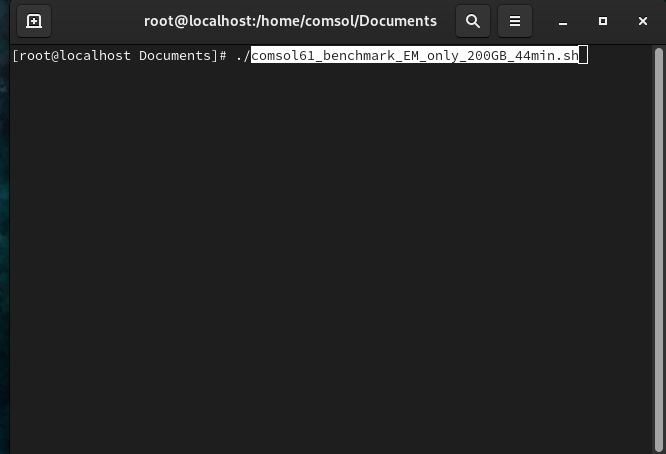

3. COMSOL: -np option

COMSOL provides -np N option that specifies N cores to be used in the calculation. For instance, comsol61_benchmark_50GB_windows.exe -np 16 can be used to utilize only 16 cores.

Turning on all 12 CCDs, -np option was used here. The results are

np_option

np_option1372×269 68.4 KBUnfortunately, two results are missing (Update: result with -np 64 for 50GB bench has been added on Jan. 3rd, 2024). Hard to talk about the trend specifically, but it is true that -np option is very helpful. The lower performance on -np 16 seems to be due to the limited direct access to the memory.

Because -np 32 uses only half the core resource of a 64-core NUMA node, I ran two simulations simultaneously to see the impact of multitasking and the potential productivity of the system. Exemplary results are as follows:simultaneous_run

simultaneous_run1741×147 46.7 KBAs expected, two simultaneous 50GB benchmarks have been finished in about 4h 46m, meaning that the productivity is almost doubled.

But one thing I would like to stress here is that the Windows scheduler is not that smart to allocate multiple jobs to NUMA nodes in a better way. For instance, if the first job with np 32 is running on NUMA node 0 (64c), and if I add a second job, then the scheduler locates the second one in the same node 0. This is not the best way in terms of the utilization of memory bandwidth.

But anyway, there were 32 available seats so it is okay. Now, no empty seats in node 0 but lots of empty seats in node 1, 2, and 3. And adding a third job? Guess what? The scheduler adds the third one on the SAME NUMA NODE 0. The scheduler refuses to see the empty seats in node 1,2,3. I don’t why, and to tell you the truth, I’m not sure whether this bizarre allocation is the problem of the Windows scheduler, or “NUMA-aware” COMSOL is responsible for this. I don’t know.

In short, as far as I know, there is no way to utilize all 192 cores on Windows by using the “np N” option with N less than 192.4. COMSOL: NPS=N option

This benchmark is also incomplete. Ran only for 200GB benchmark, and here are summary.

NPS_option

NPS_option1692×229 67.9 KBPresumably, NPS=4 reduces unnecessary communication between all 12 CCDs by making the boundary of a node within 3 CCDs. My theory is that this would be helpful to reduce cores’ waiting time caused by the long latency of the Infinity Fabric.

The best result for 200GB bench so far is obtained by using NPS=4 together with -np 965. FDTD: default run (NPS=auto)

The finite-difference time-domain (FDTD) is a popular algorithm for the simulation of electrodynamics. It solves Maxwell’s two curl equations numerically. At every step, all spatial data of electromagnetic fields, allocated in memory, are read and updated, making FDTD memory-bandwidth-intensive. But the complexity of computation is relatively low compared to other algorithms like FEM.

There are commercial programs for FDTD, such as Lumerical FDTD, but I’m currently using my homemade one, written in C++ and utilizing OpenMP and SIMD.The following is a summary of the FDTD benchmark.

FDTD

FDTD1593×214 49 KBNothing to say but the 2S 9654 is just INSANE on FDTD.

Conclusion

Running COMSOL on a big system on Windows is very tricky. The abnormal performance might be due to Windows’ premature scheduler and/or a unique topology of AMD CPUs involving long latency for communication between CCDs.If results from Linux were available, it would be more specific to say which one is responsible for the low performance. Running on Windows Server 2022 would give different results, but unfortunately, I don’t have a license for this.

In terms of the 12 x 8c topology of the CPU and its impact on the performance, a comparison with a monolithic chip like Intel’s MCC-based Saphire Rapids or upcoming 2 x MCM Emerald Rapids like Xeon 8592+ (64c, 2 x 32c) would be helpful.

Overall, I feel that Windows is not yet capable of handling a big system. A 64c CPU would be a better choice than a 96c one to run on Windows, in terms of NUMA, Windows processor groups, and consequent computational efficiencies.

++++++

Small updates on Jan. 3rd, 2024:A figure for NUMA nodes and Windows processor groups has been added

A result with -np 64 for the 50 GB bench has been added1 Comment-

It’s clear that running COMSOL on a system of this scale in a Windows environment presents some challenges, particularly due to the complex CPU topology and communication latency between CCDs. Your experiments with reducing CCDs and exploring options like -np and NPS=N shed light on potential optimizations.

For those interested in exploring more about quantum technology, here’s a related article: quantumai.cor. Keep up the excellent work, and thanks for sharing your findings with the community!

-

- Load More Posts

Register Now For Full Access

Members get unlimited access to Hive80. Ask questions, follow topics, join hives, and be a part of the community!

GAM #1

Browse our A-Z Company Spotlight List

GAM #2

Explore Hives

-

Active 6 months, 1 week ago

-

Active 6 months, 1 week ago

-

Active 6 months, 1 week ago

-

Active 6 months, 1 week ago

-

Active 6 months, 1 week ago

For those looking to give their pets the best care possible, I stumbled upon some excellent tips at https://hamsternow.com/hamster-care. It’s amazing how much love and care we can provide for our furry friends.